AI Explainability Requirements Automotive

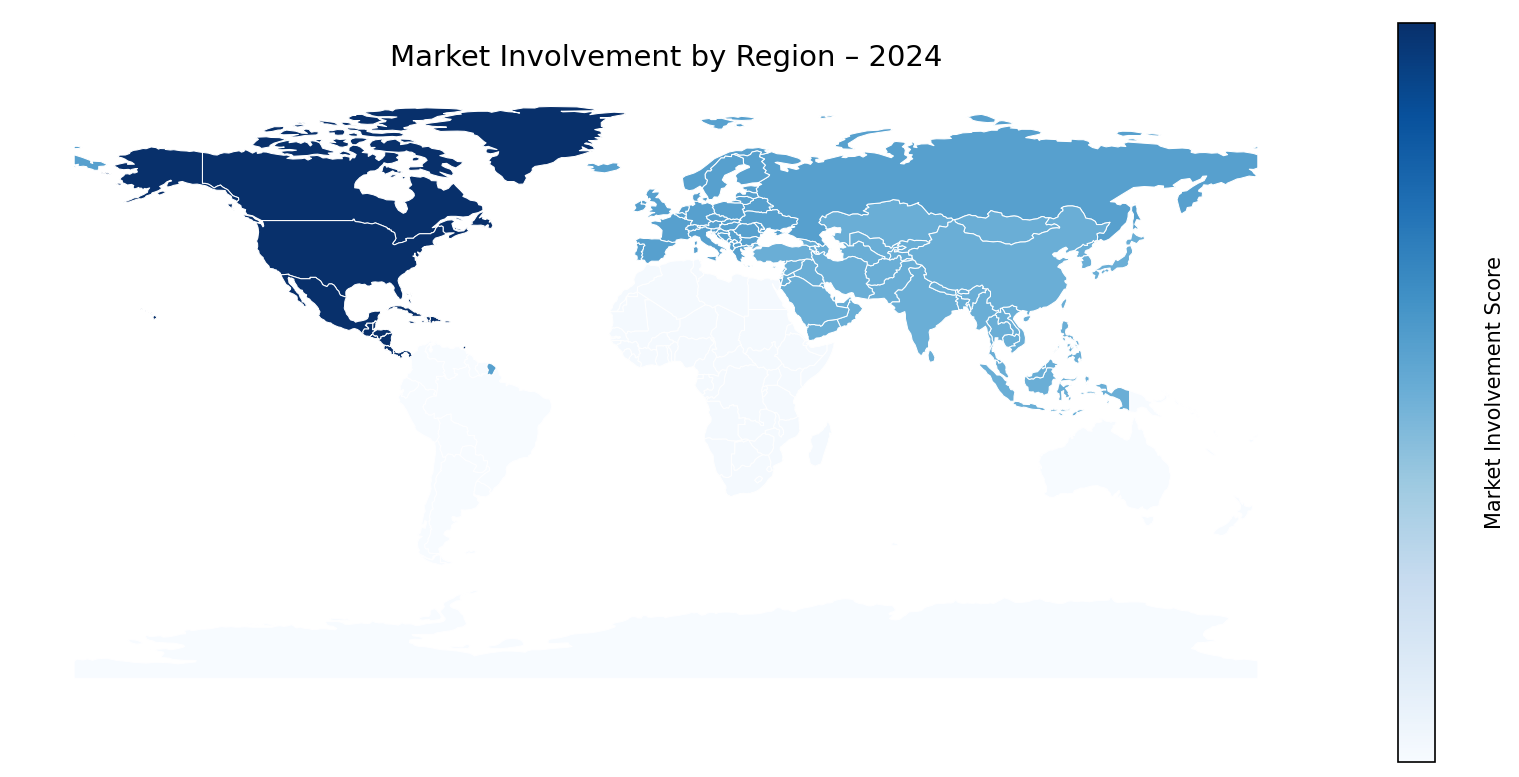

"AI Explainability Requirements Automotive" refers to the guidelines and frameworks that govern how transparently and understandably artificial intelligence systems in the automotive sector must operate. The concept grows more relevant as AI is integrated into more of the automotive landscape and stakeholders need clarity on how these systems reach decisions. Manufacturers, regulators, and consumers all depend on that transparency for safety, trust, and compliance.

AI-driven practices are reshaping the automotive ecosystem and influencing how companies innovate and compete. The ability to explain AI decisions improves stakeholder interactions and supports efficient, informed decision-making. As organizations integrate AI, they encounter significant growth opportunities alongside challenges such as adoption barriers and integration complexity. Balancing optimism about AI's potential against evolving expectations is crucial as the sector moves towards a more intelligent and interconnected future.

Unlock AI Potential in Automotive Compliance

Automotive companies should strategically invest in AI explainability initiatives and forge partnerships with technology providers to enhance transparency and trust in AI systems. This approach will not only streamline compliance processes but also drive increased customer confidence and market differentiation through ethical AI practices.

Unlocking the Future: Why AI Explainability is Crucial in Automotive

Regulatory Landscape

Set industry-specific AI explainability standards to ensure compliance. This enhances trust and transparency, crucial for automotive applications. Engage stakeholders to align requirements and overcome potential resistance, improving overall AI integration.

Industry Standards

Incorporate AI-driven insights into decision-making processes to optimize operations. This fosters a data-driven culture and improves responsiveness, facilitating better resource allocation and strategic planning across automotive supply chains.

Technology Partners

Implement strict data governance to ensure high-quality data sources for AI models. This minimizes biases and inaccuracies, which enhances AI reliability and supports better decision-making in automotive applications, driving innovation.

Internal R&D

Perform regular audits of AI systems to ensure they meet explainability standards and regulatory requirements. This proactive approach identifies issues early, improving system reliability and fostering stakeholder confidence in AI technologies.
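
As a rough illustration of what one automated audit check could look like, the sketch below scans logged AI decisions and flags any record that lacks the explanation fields an auditor might expect. The record layout and the field names (`decision_id`, `model_version`, `top_factors`, `explanation_text`) are assumptions made for this example; they are not defined by any standard or regulation.

```python
from typing import Any

# Hypothetical fields an internal policy might require on every logged decision.
REQUIRED_FIELDS = ("decision_id", "model_version", "top_factors", "explanation_text")


def audit_decision_log(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return one finding per record that is missing a required explanation field."""
    findings = []
    for index, record in enumerate(records):
        # A field counts as missing if it is absent, None, or empty.
        missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
        if missing:
            findings.append({
                "index": index,
                "decision_id": record.get("decision_id"),
                "missing": missing,
            })
    return findings


def explanation_coverage(records: list[dict[str, Any]]) -> float:
    """Share of records that carry every required field (1.0 for an empty log)."""
    if not records:
        return 1.0
    return 1 - len(audit_decision_log(records)) / len(records)


# Made-up sample log: the second record has no usable explanation.
sample_log = [
    {"decision_id": "d-001", "model_version": "1.4.0",
     "top_factors": ["obstacle_distance", "vehicle_speed"],
     "explanation_text": "Braking triggered: obstacle within stopping distance."},
    {"decision_id": "d-002", "model_version": "1.4.0",
     "top_factors": [], "explanation_text": ""},
]

for finding in audit_decision_log(sample_log):
    print(finding)
print(f"coverage: {explanation_coverage(sample_log):.0%}")
```

A check like this only confirms that an explanation was recorded. Whether the explanation is accurate or useful still needs human review.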

Industry Standards

Develop training programs to enhance understanding of AI explainability among stakeholders. This empowers teams to leverage AI capabilities effectively while addressing concerns, ultimately driving adoption and improving operational efficiency in automotive contexts.

Cloud Platform

In the automotive sector, explainability is not just a regulatory requirement; it is essential for building trust and ensuring safety in AI systems.

– Dr. Paul Noble, AI Ethics Expert at MIT

AI Governance Pyramid

Checklist

Compliance Case Studies

Seize the competitive edge in automotive AI explainability. Transform your operations and ensure compliance with standards that drive innovation and trust.

Risk Scenarios & Mitigation

Failing ISO Compliance Standards

Legal penalties arise; ensure regular compliance audits.

Neglecting Data Security Measures

Data breaches occur; implement robust encryption protocols.

Overlooking Algorithmic Bias Issues

Consumer trust erodes; conduct regular bias assessments.

Experiencing Operational Failures

Production halts; establish contingency response plans.
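
One simple form a regular bias assessment could take is comparing how often each group receives a favourable model decision. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The group labels, the sample data, and the `REVIEW_THRESHOLD` value are all assumptions for illustration, and a real assessment would need metrics chosen for the specific system.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Map each group to its share of favourable outcomes.

    `outcomes` is an iterable of (group, favourable) pairs, where
    `favourable` is True when the model decided in the person's favour.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, is_favourable in outcomes:
        totals[group] += 1
        favourable[group] += int(is_favourable)
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome
    return min(rates.values()) / highest


# Made-up data: (group label, favourable decision?)
sample = ([("A", True)] * 8 + [("A", False)] * 2
          + [("B", True)] * 5 + [("B", False)] * 5)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
REVIEW_THRESHOLD = 0.8  # assumed internal threshold, not a regulatory value
print(rates, round(ratio, 3), "review" if ratio < REVIEW_THRESHOLD else "ok")
```

A low ratio does not prove bias on its own. It is a signal that the decision factors for the affected group deserve a closer look.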

Assess how well your AI initiatives align with your business goals

Glossary


Frequently Asked Questions

- AI explainability requirements in automotive ensure transparency in AI decision-making processes.

- They enhance trust among stakeholders by providing clarity on AI behavior.

- These requirements help in compliance with industry regulations and standards.

- Organizations can improve AI model performance by understanding decision factors.

- Adopting these requirements fosters a culture of ethical AI use in automotive applications.

- Start by assessing current AI systems to identify explainability gaps.

- Engage stakeholders to understand their concerns and expectations regarding AI outputs.

- Select appropriate tools and frameworks that support AI explainability initiatives.

- Train teams on best practices for interpreting AI model decisions effectively.

- Monitor and iterate on the implementation process to continuously improve explainability.

- Enhanced customer trust leads to stronger brand loyalty and market share.

- Improved regulatory compliance avoids potential legal setbacks and fines.

- Faster identification of model biases allows for better decision-making.

- Companies can leverage insights to optimize product development and operations.

- Achieving explainability can lead to a competitive edge in the marketplace.

- Prioritize AI explainability early in the AI development lifecycle for best results.

- Implementing explainability before deployment reduces risks of unforeseen issues.

- As regulations evolve, organizations should proactively align with emerging requirements.

- Before scaling AI solutions, ensure that explainability measures are in place.

- Continuous monitoring of AI systems can prompt timely adjustments to explainability efforts.

- Resistance from teams unfamiliar with AI technologies can hinder progress.

- Complexity of existing AI models may complicate the explainability process.

- Limited resources can strain the implementation of comprehensive explainability measures.

- Balancing explainability with model performance requires careful consideration.

- Ongoing training and education are essential to address knowledge gaps in teams.

- Understanding industry standards is crucial for compliance with AI regulations.

- Regular audits help ensure adherence to both local and global compliance guidelines.

- Documentation of AI decision processes supports transparency requirements.

- Engagement with legal advisors can clarify regulatory obligations concerning AI.

- Proactive compliance strategies can mitigate risks of penalties and reputational damage.

- Establish clear KPIs to track improvements in model transparency and stakeholder trust.

- Conduct surveys to gauge stakeholder satisfaction with AI decision-making processes.

- Monitor compliance metrics to ensure adherence to regulatory requirements.

- Analyze feedback from teams on the usability of AI explainability tools.

- Regularly review performance metrics to identify areas for ongoing enhancement.